Another new iOS 7 feature is built-in barcode scanning, provided by AVFoundation's capture APIs (an AVCaptureDevice combined with the new AVCaptureMetadataOutput). Back in 2012 I threw together MonkeyScan using Windows Azure Services and the ZXing barcode scanning library. For iOS 7 I've updated the code to use the Azure Mobile Services Component and the new iOS 7 barcode scanning API instead.

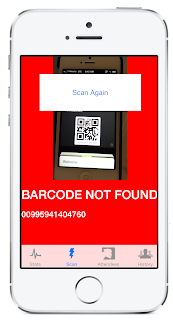

The app looks like this when scanning a PassKit pass:

The code below sets up an AVCaptureDevice for 'metadata capture' (as opposed to capturing images or video, I guess):

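```csharp
// Part of the scanner UIViewController; 'session' is an AVCaptureSession field.
bool SetupCaptureSession ()
{
    session = new AVCaptureSession ();

    // Default video device (typically the back camera); null when no camera exists, e.g. on the Simulator.
    AVCaptureDevice device = AVCaptureDevice.DefaultDeviceWithMediaType (AVMediaType.Video);
    if (device == null) {
        Console.WriteLine ("Error: no video capture device available");
        return false;
    }

    NSError error = null;
    AVCaptureDeviceInput input = AVCaptureDeviceInput.FromDevice (device, out error);
    if (input == null) {
        Console.WriteLine ("Error: " + error);
        return false; // nothing to scan without a camera input
    }
    session.AddInput (input);

    // The metadata output hands recognized barcodes to the delegate on the main queue.
    AVCaptureMetadataOutput output = new AVCaptureMetadataOutput ();
    var dg = new CaptureDelegate (this);
    output.SetDelegate (dg, MonoTouch.CoreFoundation.DispatchQueue.MainQueue);

    session.AddOutput (output); // MUST add output before setting metadata types!

    // Only recognize QR and Aztec codes.
    output.MetadataObjectTypes = new NSString[] {
        AVMetadataObject.TypeQRCode, AVMetadataObject.TypeAztecCode
    };

    // Show the live camera feed in the top part of the view.
    AVCaptureVideoPreviewLayer previewLayer = new AVCaptureVideoPreviewLayer (session);
    previewLayer.Frame = new RectangleF (0, 0, 320, 290);
    previewLayer.VideoGravity = AVLayerVideoGravity.ResizeAspectFill.ToString ();
    View.Layer.AddSublayer (previewLayer);

    session.StartRunning ();
    return true;
}
```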

You can list the specific barcode types to recognize, as above, or assign output.AvailableMetadataObjectTypes to MetadataObjectTypes to process every supported type.
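The "MUST add output before setting metadata types" comment matters because AVFoundation raises an exception if MetadataObjectTypes contains a type that isn't in AvailableMetadataObjectTypes, and that list only fills in once the output is connected to a session with a camera input. A minimal sketch of filtering a wish-list against the available types before assigning it follows. It assumes plain strings stand in for the NSString type constants, and the MetadataTypeSelection class and its Select method are hypothetical names, not part of Xamarin.iOS or MonkeyScan:

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: plain strings stand in for the NSString values of
// AvailableMetadataObjectTypes and the AVMetadataObject.Type... constants.
static class MetadataTypeSelection
{
    // Returns the wanted types that are actually available, in request order,
    // without duplicates. Passing null for 'wanted' selects every available type.
    public static string[] Select(IEnumerable<string> available, IEnumerable<string> wanted)
    {
        var availableList = available.ToList();
        if (wanted == null)
            return availableList.ToArray();

        var availableSet = new HashSet<string>(availableList);
        return wanted.Where(t => availableSet.Contains(t)).Distinct().ToArray();
    }
}
```

On the device, the strings would come from the output after session.AddOutput, and the result would be converted back to NSString values before assigning MetadataObjectTypes.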

...and it speaks!

Since the app now requires iOS 7, it can also use the new AVSpeechSynthesizer (via a Speak helper method) to speak the scan result (see previous post):

```csharp
if (valid && !reentry) {
    // Valid pass, first scan
    View.BackgroundColor = UIColor.Green;
    Speak ("Please enter");
} else if (valid && reentry) {
    // Valid pass that is being scanned again
    View.BackgroundColor = UIColor.Orange;
    Speak ("Welcome back");
} else {
    // Invalid pass
    View.BackgroundColor = UIColor.Red;
    Speak ("Denied!");
}
```
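The if/else chain above doesn't depend on UIKit, so one option is to pull the decision into a plain C# helper that can be unit tested off-device. The sketch below shows that refactoring. ScanOutcome, ScanFeedback and their members are hypothetical names, not part of MonkeyScan, and colours are represented by name rather than UIColor:

```csharp
// Hypothetical, UI-free model of the scan feedback: which colour and phrase
// the app shows for a given scan result.
enum ScanOutcome { Enter, WelcomeBack, Denied }

static class ScanFeedback
{
    // Same branching as the if/else chain: any invalid scan is denied,
    // and a valid scan is either a first entry or a re-entry.
    public static ScanOutcome Classify(bool valid, bool reentry)
    {
        if (!valid)
            return ScanOutcome.Denied;
        return reentry ? ScanOutcome.WelcomeBack : ScanOutcome.Enter;
    }

    // Phrase passed to Speak for each outcome.
    public static string Phrase(ScanOutcome outcome)
    {
        switch (outcome)
        {
            case ScanOutcome.Enter: return "Please enter";
            case ScanOutcome.WelcomeBack: return "Welcome back";
            default: return "Denied!";
        }
    }

    // Background colour (by name) for each outcome.
    public static string ColorName(ScanOutcome outcome)
    {
        switch (outcome)
        {
            case ScanOutcome.Enter: return "Green";
            case ScanOutcome.WelcomeBack: return "Orange";
            default: return "Red";
        }
    }
}
```

In the view controller, the result of Classify would then select the UIColor for View.BackgroundColor and the text handed to Speak.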

The MonkeyScan GitHub repo has been updated with this code.